Hello, I am currently a fifth-year Ph.D. student at the School of Information Science and Technology, University of Science and Technology of China (USTC), supervised by Prof. Zheng-Jun Zha. Previously, I received my B.E. from the Special Class for the Gifted Young, Chien-Shiung Wu College, Southeast University (SEU), majoring in Automation. I am also a research intern at the Beijing Academy of Artificial Intelligence (BAAI) & GALBOT, working closely with Prof. He Wang.

My Ph.D. research mainly focuses on Machine Learning, with specific directions including Domain Generalization, Test-time Adaptation, and Continual Learning. During my internship, my research focuses on Embodied AI, with specific directions including Embodied Navigation, Vision-Language-Action Models, and Multimodal Large Models.

My research interests include enhancing the generalization and robustness of models’ perceptual capabilities in open-world environments, and enabling robots to perceive, understand, and act in the real world. In my future research career, I hope to pursue work that either drives influential and insightful advances in technology, or explores fundamental principles with scientific significance underlying challenging problems.

I am actively looking for postdoctoral opportunities. If you have a suitable position or collaboration in mind, please feel free to contact me by email.

Education

- University of Science and Technology of China

- Southeast University

Experience

- Beijing Academy of Artificial Intelligence & GALBOT

Selected Publications

Machine Learning

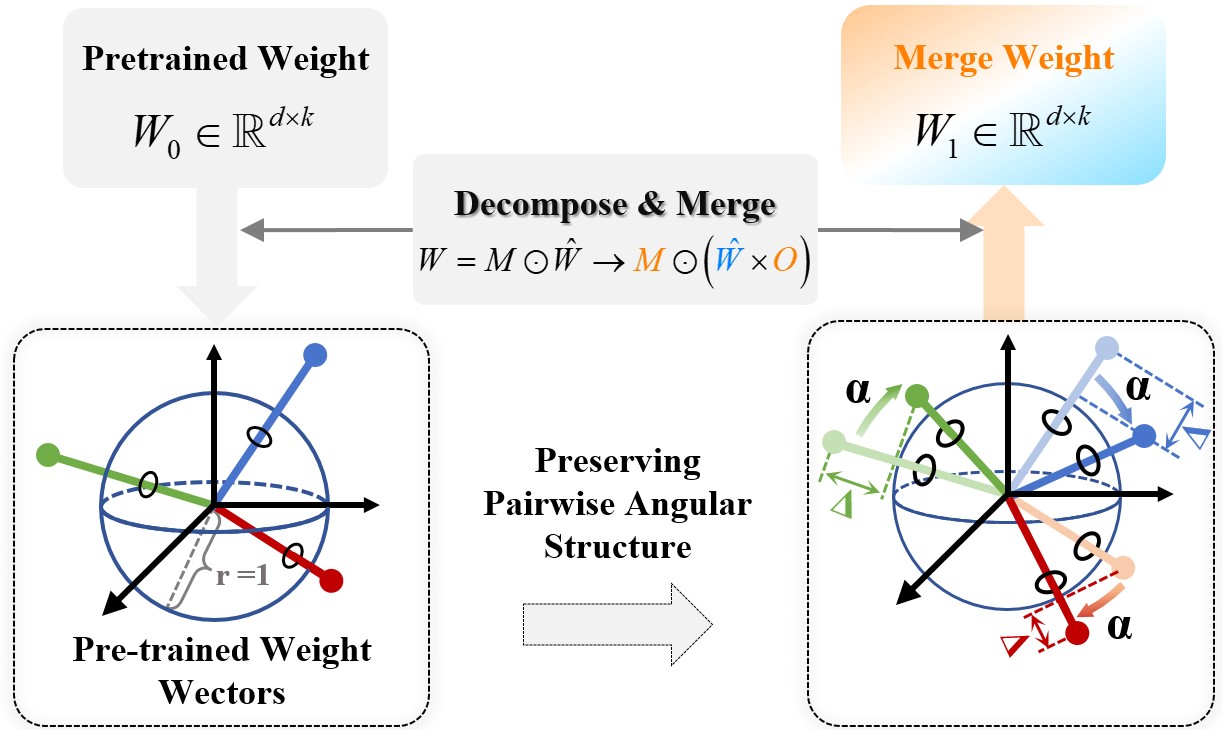

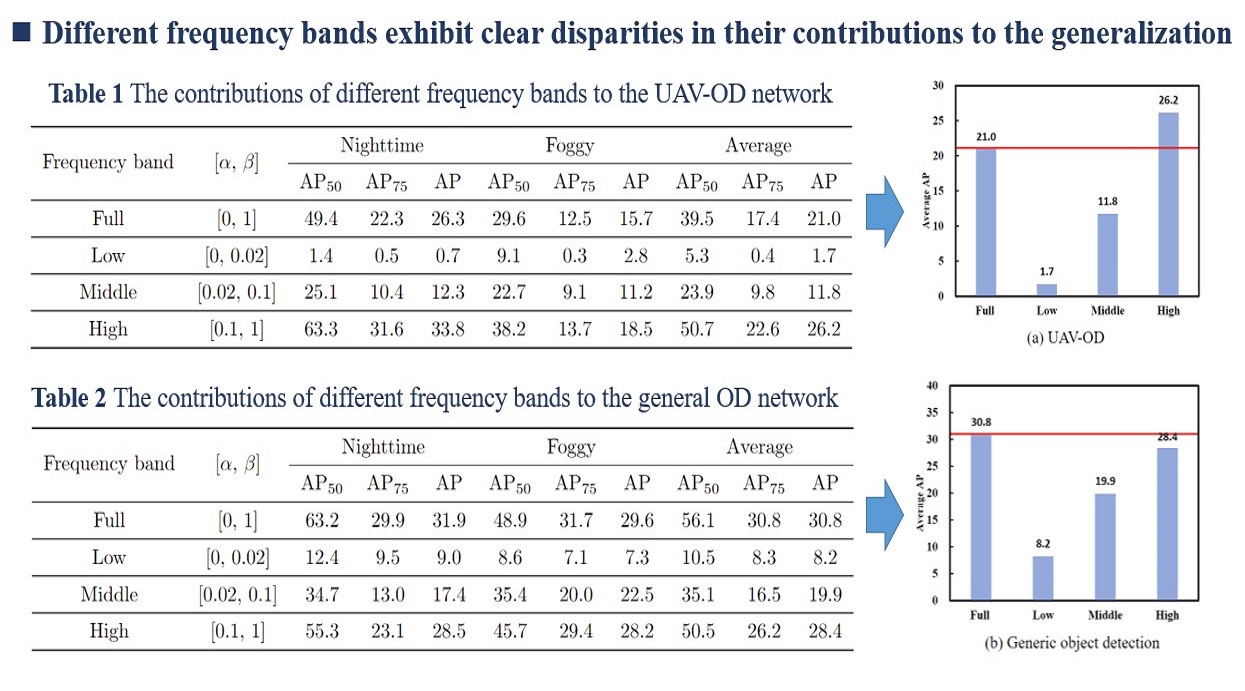

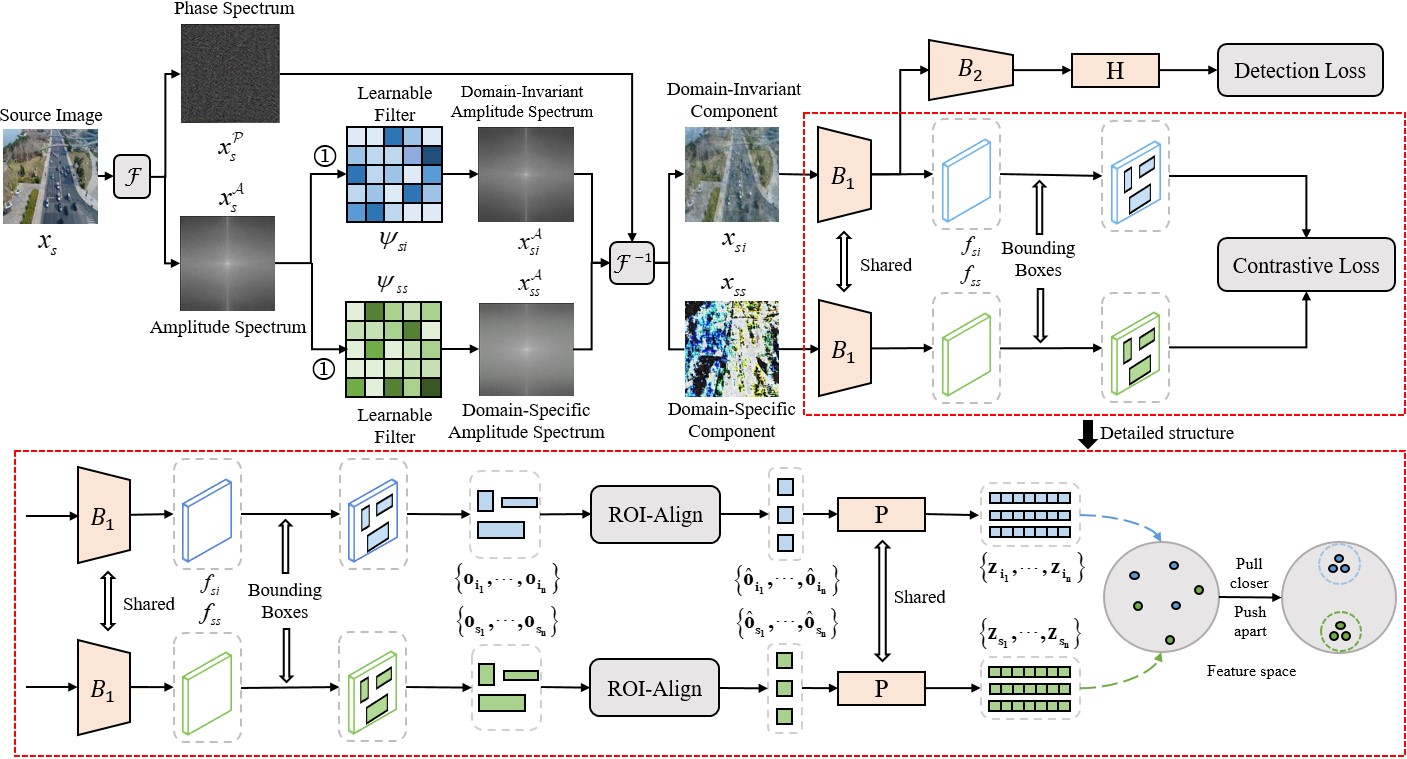

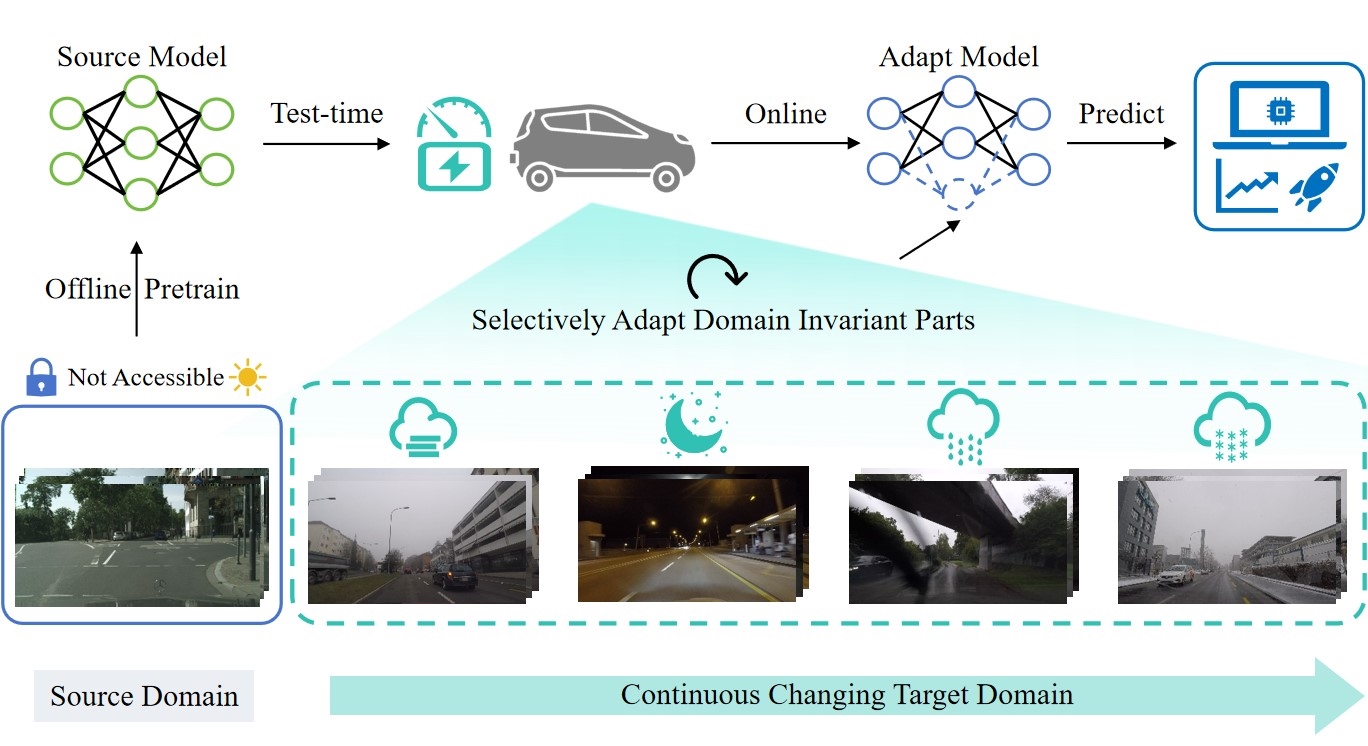

Efficient Test-time Adaptive Object Detection via Sensitivity-Guided Pruning

CVPR 2025 Oral (3.3% of all accepted papers)

During source-to-target domain transfer, not all source-learned features are beneficial; some can even degrade target performance.

[Paper] [Oral Presentation Slides] [Watch on Bilibili (Featured by VALSE)]

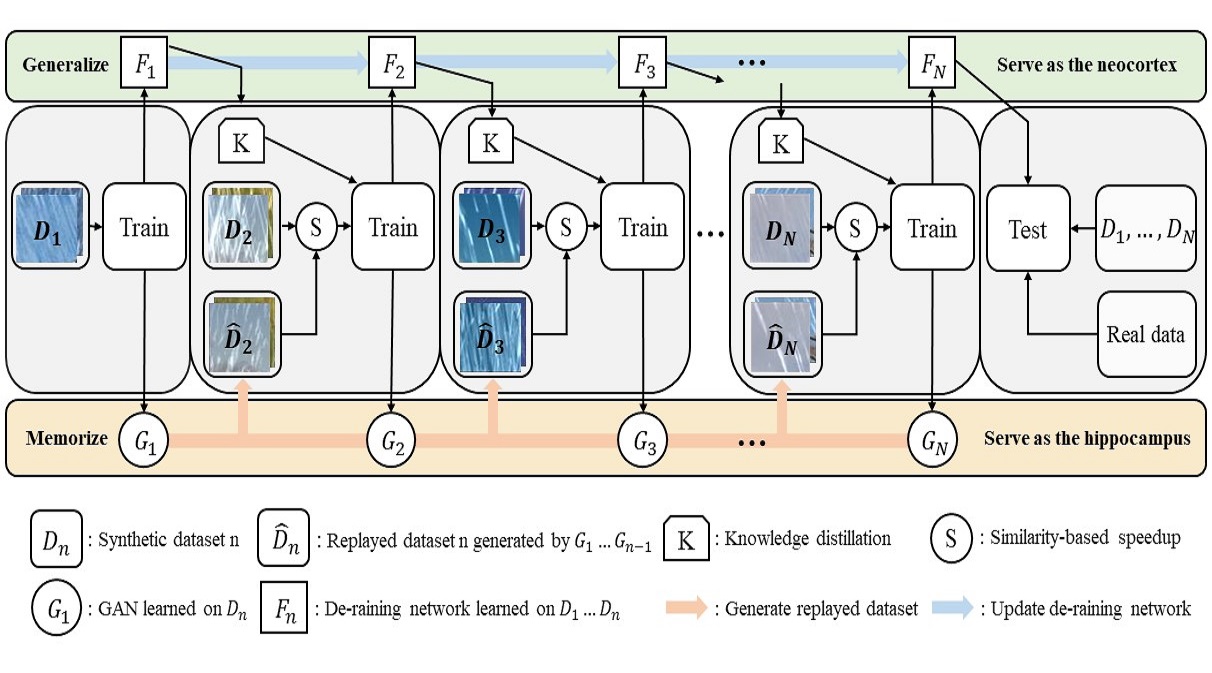

Towards Better De-raining Generalization via Rainy Characteristics Memorization and Replay

Manuscript under minor revision at TNNLS

We propose a brain-inspired continual learning framework by imitating the complementary learning mechanism of the human brain.

Embodied AI

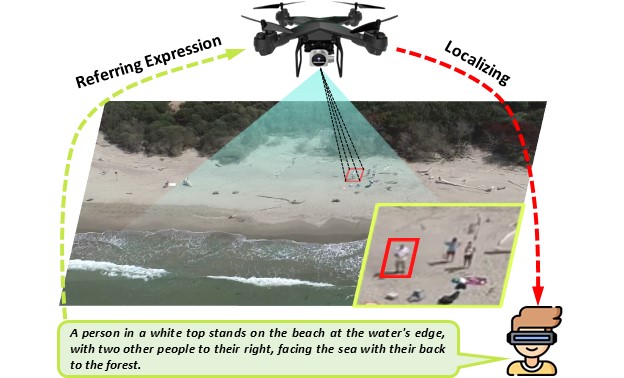

Uni-NaVid: A Video-based Vision-Language-Action Model for Unifying Embodied Navigation Tasks

RSS 2025

NaVid: Video-based VLM Plans the Next Step for Vision-and-Language Navigation

RSS 2024

[Paper] [Project Page] [Code] [Oral Presentation Slides] [NaVid on Baidu Encyclopedia]
